Recently, VMware released the Docker Volume Driver for vSphere 1.0 beta, which enables a Docker host to create volumes directly on a vSphere datastore (Virtual SAN, VMFS, NFS, etc.). The volumes can be mounted directly into Docker containers, which solves the problem of storing the persistent data of those containers. The driver not only simplifies storage configuration; its volumes can also be associated with the Storage Policy Based Management (SPBM) of vSphere. For example, an administrator can set the Failures To Tolerate (FTT) or Stripe Width (SW) of a data volume. Volumes governed by SPBM policies can achieve a higher level of data protection and better performance. The Docker Volume Driver for vSphere is an open-source project, available at https://github.com/vmware/docker-volume-vsphere .

This blog walks through the steps of creating data volumes in VMware Virtual SAN (VSAN). As an example of a containerized application, the open source Harbor Registry is used to describe the usage of data volumes provisioned by VSAN, through which Harbor Registry achieves a higher data protection level and high availability (HA).

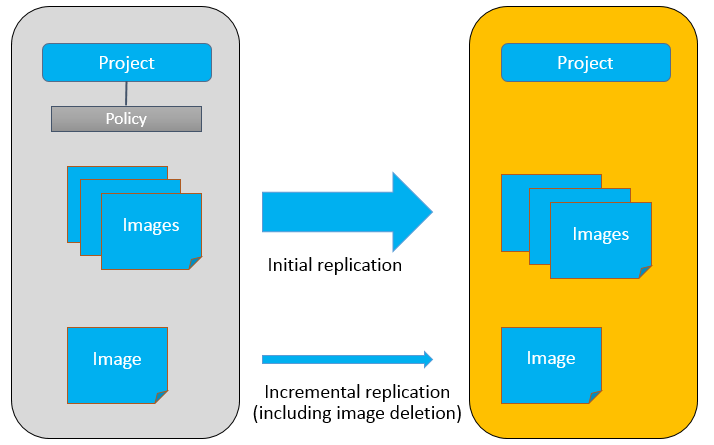

A little more background about Harbor Registry: it is another open-source project by VMware. A registry is one of the necessary components of a container’s build-ship-run lifecycle. Harbor helps users set up an enterprise private Docker registry service rapidly. It also provides enhanced features usually required by enterprises, such as a graphical user interface (GUI), role-based access control, AD/LDAP integration and image replication. Harbor’s GitHub repo: https://github.com/vmware/harbor .

The architecture of the system is illustrated in the figure above. Three ESXi hosts form a VSAN cluster, and a Harbor registry VM runs on one of the hosts. Three external Docker volumes are created in the VSAN cluster to store Harbor’s persistent data. The cluster pools the local disks of each host into consolidated storage. It can tolerate the failure of one physical host and still preserve data integrity and accessibility.
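The one-host tolerance follows from how Virtual SAN places mirrored data. As a rough sketch, assuming the default RAID-1 mirroring layout (where tolerating n host failures requires 2n + 1 hosts), the arithmetic for this three-host cluster can be checked as below. The function name min_hosts_for_ftt is purely illustrative and is not part of any VMware tool.

```shell
# Minimum number of hosts needed to tolerate a given number of host
# failures (FTT), assuming RAID-1 mirroring: the replicas and the
# witness components must sit on separate hosts, giving 2 * FTT + 1.
min_hosts_for_ftt() {
    ftt=$1
    echo $((2 * ftt + 1))
}

min_hosts_for_ftt 1   # prints 3, matching the three ESXi hosts used here
```

With three hosts, one failure still leaves a majority of components available, which is why the data stays accessible.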

The configuration process is as follows.

1. First, set up a Virtual SAN cluster with three ESXi hosts. A Photon OS VM ( https://vmware.github.io/photon/ ) is installed on one of the ESXi servers as a Docker host. Other Linux distributions such as Ubuntu can be used as well, as long as they can run Docker Engine and Docker Compose.

2. On the release page of the Docker Volume Driver for vSphere project (https://github.com/vmware/docker-volume-vsphere/releases), download the plugin packages for the ESXi hosts and for the VMs respectively. For example, for 1.0 beta, the file names are:

vmware-esx-vmdkops-1.0.beta.zip

docker-volume-vsphere-1.0.beta-1.x86_64.rpm

3. On each of the ESXi hosts, use the following command to install the plugin (SSH must be enabled on the ESXi host). No reboot is required after installation.

# esxcli software vib install -d "/vmware-esx-vmdkops-1.0.beta.zip" --no-sig-check -f

Installation Result

Message: Operation finished successfully.

Reboot Required: false

VIBs Installed: VMWare_bootbank_esx-vmdkops-service_1.0.0-0.0.1

VIBs Removed:

VIBs Skipped:
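Step 3 has to be repeated on every ESXi host. A minimal sketch of scripting that repetition over SSH is shown here. The host names, the root login, and the DRY_RUN switch are assumptions made for illustration; only the esxcli command itself comes from the step above.

```shell
# Hypothetical host names; replace with the ESXi hosts of your own cluster.
ESXI_HOSTS="esxi-01.example.com esxi-02.example.com esxi-03.example.com"
# Assumed location of the offline bundle on each host.
VIB_BUNDLE="/vmware-esx-vmdkops-1.0.beta.zip"

# Print the install command for a given bundle path.
vib_install_cmd() {
    printf 'esxcli software vib install -d "%s" --no-sig-check -f\n' "$1"
}

# Run the install on every host over SSH.
# With DRY_RUN=1 the commands are only printed, not executed.
install_on_all_hosts() {
    for host in $ESXI_HOSTS; do
        cmd=$(vib_install_cmd "$VIB_BUNDLE")
        if [ "${DRY_RUN:-0}" = "1" ]; then
            printf 'ssh root@%s %s\n' "$host" "$cmd"
        else
            ssh "root@$host" "$cmd"
        fi
    done
}

# Example: DRY_RUN=1 install_on_all_hosts
```

Running it with DRY_RUN=1 first makes it easy to review what would be executed before touching the hosts.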

4. On the Photon VM, install the RPM package. For Debian-based distributions, install the corresponding deb package instead.

# rpm -ivh docker-volume-vsphere-1.0.beta-1.x86_64.rpm

Preparing... ##################### [100%]

Updating / installing...

1:docker-volume-vsphere-0:1.0.beta-############################ [100%]

File: '/proc/1/exe' -> '/usr/lib/systemd/systemd'

Created symlink from /etc/systemd/system/multi-user.target.wants/docker-volume-vsphere.service to /usr/lib/systemd/system/docker-volume-vsphere.service.
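The installation output shows that a systemd unit named docker-volume-vsphere.service is enabled. A small sketch for confirming that the service is actually running before creating volumes, assuming a systemd-based Docker host as in the output above (the function name is illustrative):

```shell
# Report whether the volume plugin service is currently active.
plugin_service_state() {
    if systemctl is-active --quiet docker-volume-vsphere; then
        echo "active"
    else
        echo "inactive"
    fi
}

# Example: plugin_service_state
```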

5. After the ESXi plugin is installed, a management script is generated at /usr/lib/vmware/vmdkops/bin/vmdkops_admin.py. This script helps administrators manage the data volumes. For example, an administrator can create different storage policies. In Virtual SAN, the default storage policy has a Stripe Width setting of 1 (SW=1). We will create a new policy with SW=2 as an example.

To do this, just SSH into any of the ESXi hosts and run this command:

# /usr/lib/vmware/vmdkops/bin/vmdkops_admin.py policy create --name SW=2 --content '(("stripeWidth" i2))'

The parameter ‘SW=2’ is the name of the policy. The key point is the content of the policy, which is ‘(("stripeWidth" i2))’ in this example. Other settings are the same as the Virtual SAN policy parameters. The possible parameters and their description are as follows:
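To illustrate the policy content format used in the command above, here is a small sketch that assembles such a string from key=value pairs. It only handles integer-valued settings (the i prefix). The helper name build_policy_content is hypothetical, and combining stripeWidth with hostFailuresToTolerate in one policy is an assumption based on the standard Virtual SAN policy parameters, not something demonstrated in this walkthrough.

```shell
# Build a policy content string from integer-valued key=value pairs,
# e.g. stripeWidth=2 becomes (("stripeWidth" i2)).
build_policy_content() {
    content=""
    for pair in "$@"; do
        key=${pair%%=*}      # text before the first '='
        value=${pair#*=}     # text after the first '='
        content="${content}${content:+ }(\"${key}\" i${value})"
    done
    printf '(%s)\n' "$content"
}

build_policy_content stripeWidth=2
# prints (("stripeWidth" i2))
```

The resulting string would be passed to the --content option of the policy create command.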

6. Now Docker volumes can be created on the Docker host (the Photon OS VM). As an example, we first create two volumes with default storage policy and then create another volume with the newly created ‘SW=2’ policy.

# docker volume create --driver=vmdk --name=vsanvol1 -o size=50gb

vsanvol1

# docker volume create --driver=vmdk --name=vsanvol2 -o size=20gb

vsanvol2

# docker volume create --driver=vmdk --name=vsanvol3 -o size=20gb -o vsan-policy-name=SW=2

vsanvol3
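The three commands above differ only in name, size and the optional policy. As a sketch, a helper could assemble the command line from those values; the function name volume_create_cmd is illustrative, and it only prints the command so it can be reviewed before being run.

```shell
# Print the docker volume create command for a vmdk-backed volume.
# Arguments: volume name, size, and an optional storage policy name.
volume_create_cmd() {
    name=$1
    size=$2
    policy=${3:-}
    cmd="docker volume create --driver=vmdk --name=${name} -o size=${size}"
    if [ -n "$policy" ]; then
        cmd="${cmd} -o vsan-policy-name=${policy}"
    fi
    printf '%s\n' "$cmd"
}

volume_create_cmd vsanvol1 50gb
volume_create_cmd vsanvol3 20gb SW=2
```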

By specifying the ‘--driver=vmdk’ parameter, the external volume is created in the vSphere datastore, specifically in the same datastore where the Photon OS VM resides. In this example the Photon OS VM is stored in Virtual SAN, and so are the Docker volumes. The volumes are stored as VMDK files. Note that the volumes are not yet attached to any VM, so the VM’s page in the vSphere Web Client shows no information about them. However, we can find them in the dockvols directory in the Virtual SAN datastore.

Later in this walkthrough, once the volumes are mounted to running containers, the VMDKs can be found through the VM’s page.

7. On the Photon OS VM, download the Harbor Registry source code. Before installing Harbor, we need to modify the harbor/Deploy/docker-compose.yml configuration file in order to use the newly created external volumes. We can then install Harbor by following the official Harbor installation guide.

Open the docker-compose.yml file. Find the ‘registry’ section and modify these lines:

volumes:

- /data/registry:/storage

- ./config/registry/:/etc/registry/

to

volumes:

- vsanvol1:/storage

- ./config/registry/:/etc/registry/

vsanvol1 is the external volume we just created.

Next, look for the ‘mysql’ section and modify these lines:

volumes:

- /data/database:/var/lib/mysql

to

volumes:

- vsanvol2:/var/lib/mysql

Similarly, vsanvol2 is another volume we just created.

Next, look for the ‘jobservice’ section and modify these lines:

volumes:

- /data/job_logs:/var/log/jobs

- ./config/jobservice/app.conf:/etc/jobservice/app.conf

to

volumes:

- vsanvol3:/var/log/jobs

- ./config/jobservice/app.conf:/etc/jobservice/app.conf

Similarly, vsanvol3 is another volume we just created.

At the end of the file, add the following lines:

volumes:

  vsanvol1:

    external: true

  vsanvol2:

    external: true

  vsanvol3:

    external: true

These lines indicate that these volumes have already been created and do not need to be created by Docker again. Keep the other settings in docker-compose.yml unchanged. Then install Harbor by following the official guide and bring up the Harbor registry service.
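Because the volumes are declared as external, they must already exist before Harbor is started. A minimal pre-flight sketch, using the standard docker volume inspect command, could list any volume that Docker does not know about; the function name missing_volumes is illustrative.

```shell
# Print the name of every given volume that Docker cannot find.
missing_volumes() {
    for name in "$@"; do
        if ! docker volume inspect "$name" >/dev/null 2>&1; then
            printf '%s\n' "$name"
        fi
    done
}

# Example: missing_volumes vsanvol1 vsanvol2 vsanvol3
# No output means all three volumes exist.
```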

8. After Harbor is running, we can check the vSphere Web Client and confirm that these three external volumes are indeed attached to the Photon OS VM. They appear as ‘Hard Disk 2’, ‘Hard Disk 3’ and ‘Hard Disk 4’ in the VM respectively. In this beta version, there seem to be some bugs in displaying storage policies. For example, the storage policies of these VMDKs are displayed as ‘None’, although ‘Hard Disk 3’ was created with the ‘SW=2’ policy and the other two VMDKs were created with the default storage policy. The screenshot below shows the storage policy of ‘Hard Disk 4’:

Virtual SAN may not correctly identify the storage policy created by ‘Docker Volume Driver for vSphere’. This problem should be resolved in a newer version.

9. Let’s upload two images to test whether any data is lost when a host fails.

10. Enable vSphere HA on this Virtual SAN cluster with the default HA settings. Then identify the ESXi host on which the Photon OS VM is running; in this example its IP address is 10.162.102.130.

11. Power off the physical host with IP address 10.162.102.130. Wait a while for HA to restart the VM, then check the state of the Photon OS VM.

The VM has been restarted on another healthy host, and the original external volumes are attached to the restarted VM. Because one host of the VSAN cluster is powered off, each VMDK has a component shown as ‘absent’. However, the default storage policy of Virtual SAN tolerates the failure of one host, so the data remains accessible.

12. After the Photon VM is restarted, check the status of Harbor. All the services and containers are running normally.

13. Check the Harbor UI. The two images we uploaded earlier are still intact, which indicates that there is no data loss.
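The status check after the restart can also be scripted. The sketch below uses the standard docker ps filters to report which expected containers are not running. The container names passed in the example are assumptions taken from the service names in docker-compose.yml; the actual container names on a given host may differ.

```shell
# Print each expected container name that is not currently running.
containers_not_running() {
    running=$(docker ps --filter status=running --format '{{.Names}}')
    for name in "$@"; do
        if ! printf '%s\n' "$running" | grep -qxF -- "$name"; then
            printf '%s\n' "$name"
        fi
    done
}

# Example (hypothetical container names):
# containers_not_running registry mysql jobservice
```

No output means every listed container is up.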

When vSphere HA restarted the Harbor VM on another healthy host, all the containers of Harbor were restarted as well. They are connected to the same original volumes, as shown in the figure.

This blog introduces an example of achieving Harbor registry HA by leveraging Virtual SAN and vSphere HA. Since Harbor is a multi-container application, this approach can also be applied to other container-based applications.
